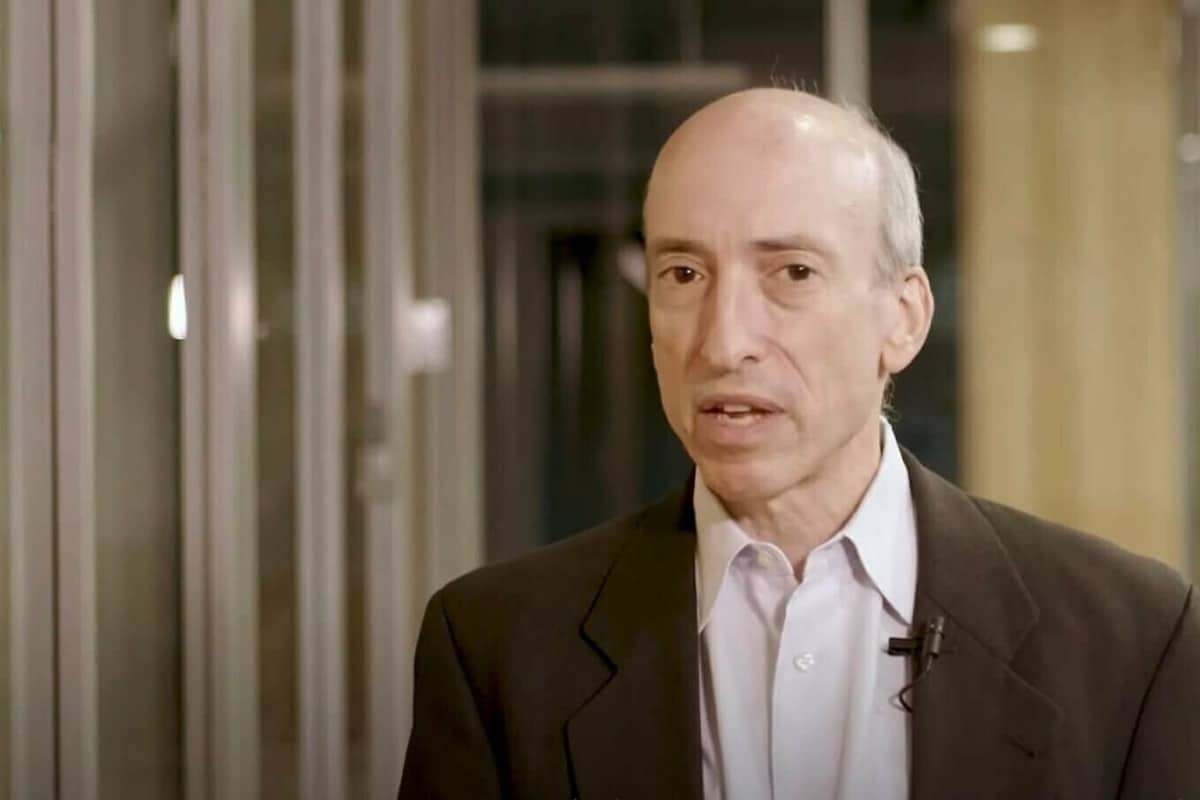

Securities and Exchange Commission (SEC) Chair Gary Gensler has previously warned that artificial intelligence (AI) could lead to the next financial crisis. Gensler once again elaborated on this topic during a virtual fireside chat hosted by the non-profit advocacy organization Public Citizen on Jan. 17.

Gensler spoke in depth about how AI can manipulate markets and investors, warning against “AI washing,” algorithmic bias and more. As the conversation began, Gensler also clarified that he would address only topics pertaining to traditional financial markets. He made this clear when the panel moderator asked if he had “coined” the term AI washing, to which Gensler responded, “Well, you said ‘coin’ and this is a crypto free day, so it’s not that.”

Gensler warns about AI fraud and manipulation

Gensler then explained that AI washing refers to making claims about a new technology model in order to attract investor or employee interest. “If an issuer is raising money from the public or just doing its regular quarterly filing, it’s supposed to be truthful in those filings,” he said. According to Gensler, the SEC's position is that when new technologies come into play, issuers must be truthful about their claims, detailing the risks and how those risks are being managed and mitigated.

The SEC Chair also noted that because AI is built into the fabric of financial markets, there need to be ways to ensure that the humans behind these models are not misleading the public. “Fraud is fraud, and people are going to use AI to fake out the public, deep fake, or otherwise. It’s still against the laws not to mislead the public in this regard,” said Gensler.

Gensler added that AI was to blame for a fake blog post that announced his resignation in July 2023. More recently, the SEC’s official X account was used to post a false announcement that a spot Bitcoin exchange-traded fund (ETF) had been approved, which Gensler attributed to a system hack rather than AI.

The @SECGov twitter account was compromised, and an unauthorized tweet was posted. The SEC has not approved the listing and trading of spot bitcoin exchange-traded products.

— Gary Gensler (@GaryGensler) January 9, 2024

In addition, Gensler mentioned that AI has enabled a new form of “narrowcasting.” According to Gensler, narrowcasting allows AI-enabled systems to target specific individuals based on data from connected devices. While this shouldn’t come as a surprise, Gensler warned that a conflict arises if a system puts the interests of a robo-advisor or broker-dealer ahead of those of investors.

Such issues may also lead to concerns around algorithmic bias. According to Gensler, AI can be used to select things like which resumes to read when applicants apply for jobs, or who may be eligible for financing when buying a home. With this in mind, Gensler pointed out that the data used by algorithms can reflect societal biases. He said:

“There are a couple of challenges embedded in AI because of the insatiable desire for data, coupled with the computational work, which is multidimensional. That math behind it makes it hard to explain the algorithm’s final decisions. It can predict who has better credit, or it might predict to market in one way or another, and how to price products differently. But what if that is also related to one’s race, gender, or sexual orientation? This is not what we want, and it’s not even allowed.”

While this presents a challenge, Gensler suggested that it will be resolved over time because humans set the hyperparameters behind algorithms. For instance, he noted that individuals must take on the responsibility of understanding why AI-based algorithms perform in certain ways. “If humans can’t explain why, then what responsibilities do you have as a group of people deploying a model to understand your model? I think this will play out in a number of years, and ultimately into the court system,” said Gensler.

Centralized AI poses risk for financial sector at large

All things considered, Gensler commented that he thinks AI itself is a net positive for society, citing the efficiency and access it can provide in financial markets. However, Gensler warned that one of the biggest looming risks to consider with AI is centralization. “The thing about AI is that everything about it has similar economics of networks, so that it will tend toward centralization. I believe it’s inevitable that we will have measured on the fingers of one hand, large based models and data aggregators,” he said.

With only a handful of large based models and data aggregators in play, Gensler believes that a “monoculture” will likely emerge. This could mean that hundreds or thousands of financial actors rely on a single central data source or central AI model. Gensler further pointed out that financial regulators don’t have oversight of these central nodes. He said:

“The whole financial sector indirectly will be relying on those central nodes, and if those nodes have it wrong, the monoculture goes one way. This places risk in society and on the financial sector at large.”

Gensler added that he will continue to raise awareness amongst his international colleagues about the challenges around AI and the impact these will likely have on traditional financial systems. “The awareness is rising, but I will stick to our lane of financial services and securities law,” he said.
